Amazon Wants to Put Alexa in Just about Everything

The online retail giant is teaming up with the semiconductor firm NXP to help put voice recognition technology in an array of gadgets—starting in your home.

April 13, 2017

What if your house were truly intelligent? What if, when you woke up in the morning, a cheery-sounding robotic voice asked whether it should start brewing you a cup of coffee at six o’clock or whether you’d rather sleep in? What if your house knew your patterns, realizing what kind of news interests you and what time you need to head to work, and worked to meet those needs?

Or what if you worked from home the next day and had to meet a business contact for a late lunch? From your home office, you could say: “Alexa, book me a reservation for two at a good Thai restaurant within two miles at 2 p.m. today and order me a ride to get there.” And suddenly, with a single sentence, you’ve replaced the need to launch multiple apps.

Voice recognition technology is evolving quickly, putting sci-fi-esque scenarios like these within reach. Science fiction writers have seen this type of future coming for decades—think of HAL in the film 2001: A Space Odyssey or a computer verbally responding to Captain Kirk’s commands in the first season of Star Trek starting in the 1960s.

For voice technology to reach such heights, the current strategy of deploying voice functionality through a central hub must give way to an ecosystem of networked devices. “The next phase in the evolution of voice recognition is that there will be an entity you can communicate with. You won’t be limited to talking to one particular device,” says Leo Azevedo, director, i.MX applications processors at NXP. “You can control things from wherever you are without using a smartphone. You could tell your refrigerator, your microwave oven, or any one point in your home network to turn off a light in part of the house and it will work because all of those devices are interconnected.”

Opening Up Alexa and Connecting the Ecosystem

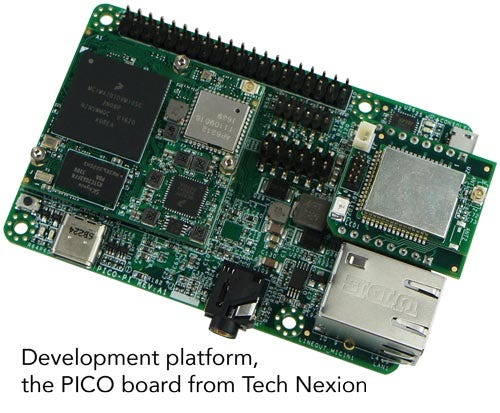

Today, Amazon and the semiconductor firm NXP are taking a step in that direction by announcing that they are making the entire commercial Echo experience available to third parties. They have opened up the reference design, which supports the same far-field speech processing found on the Echo, to hardware manufacturers, allowing them to integrate Amazon Alexa into an array of gadgets.

Amazon is providing a development kit free of charge to manufacturers as part of an invite-only program designed to spur the growth of the Alexa platform and market. The program extends earlier efforts that incorporated Alexa functions in devices like the Pebble smartwatch and Raspberry Pi.

The move could help manufacturers, many of whom aren’t familiar with voice technology, to integrate Alexa into their products while preserving the user experience found in the Amazon Echo.

When it comes to designing voice-enabled products, some of the biggest challenges tend to be designing the front end, the microphone array, and capturing voice across the room. “Integrating cloud functionality on the back end was easy, which is why Amazon opened it up to everyone earlier,” Azevedo explains. “But all kinds of people have been trying to make Alexa-certified products, and Amazon was worried that, if end users bought a product and saw Alexa on the box, they would assume that the experience will be just like the Echo.” But if the experience didn’t live up to that, end users wouldn’t know whether the problem was with the cloud, the microphone array, or the design of the front end.

The new kit is designed to optimize the user experience by working as a sort of blueprint for manufacturers, and it will include the same seven-microphone array found in Amazon Echo. “Since the introduction of Amazon Echo and Echo Dot, device makers have been asking us to provide the technology and tools to enable a far-field Alexa experience for their products,” says Priya Abani, director of Amazon Alexa. “With this new reference solution, developers can design products with the same unique 7-mic circular array, beam-forming technology, and voice processing algorithms that have made Amazon Echo so popular with customers.”

Let’s say a company that makes medical devices wants to embed voice control into a product. “They will get access to all of the design files and the code for that platform,” says Azevedo. “Because many of these devices are standard, like ultrasound, all they would need to do is to add it to their form factor. We have these modules that have software running on them. They could just get that module, put it into their unit, wire it to the microphones, punch some holes for the mic, and get voice added to the unit.”

Voice technology may seem poised to go everywhere, but making it so you can speak a command in environments ranging from a kitchen to your car without a dedicated hub is a relatively new reality. “We see voice as the future, and our goal is to give OEMs a wide range of hands-free and far-field reference solutions to fit their needs. We anticipate that we’ll see a wide variety of interesting use cases from device manufacturers,” says an Amazon spokesperson.

Increasing the number of Alexa-enabled devices in a household would improve Alexa’s effectiveness. “The circular microphone array and software that’s part of the kit allows OEMs to add the far-field capabilities seen in Echo to their devices, where users can interface with devices from any direction,” explains an Amazon spokesperson. “It’s ideal for products such as wireless speakers, sound bars, smart home hubs, and home entertainment systems.”

There are also many potential applications for the technology in the industrial space and beyond. “A hospital is an environment with a lot of devices that must be disinfected periodically after they are touched. Voice works quite nicely in situations like that,” says Azevedo from NXP.

Amazon’s strategy to make Alexa accessible to developers has already worked for Alexa APIs. With more than 10,000 skills and counting, you can use Alexa to order a pizza or an Uber, plan a vacation, or find out how long a security line is at an airport via TSA’s public API—all without looking at a screen. While Gizmodo questions the usefulness of many of those apps, Amazon has a substantial lead over Google in terms of the volume of skills it supports.

Already, many people in the tech community are beginning to see Alexa skills as a necessity, much as practically all companies are expected to have a website and a mobile app. “There really is no excuse not to have an Alexa skill. They don’t take much developer effort to create,” says Lisa Seacat DeLuca, a tech strategist for IBM Commerce.

DeLuca encourages Amazon to continue opening up its APIs and to allow Alexa skills to interact with and leverage each other. She also says that there is room for improvement when it comes to the naturalness of uttering commands. For instance, DeLuca developed an NFC-based app for sending a notification when a laundry wash cycle is complete. To use the app, a user would say: “Alexa, tell LaundryNFC to start my washer” or “Alexa, ask LaundryNFC how much time is left on my dryer.” “It’s weird,” DeLuca writes. “If I were asking a real personal assistant I’d simply say: ‘Alexa, start my washer’ or ‘Alexa, how much time is left on my dryer?’”

Alexa as an Ever-Expanding Platform

“Voice interfaces that support natural language processing backed by bots and AI are the new UI,” says Chris Kocher, managing director at Grey Heron (San Francisco). “This will have a major impact on developers, apps, and UI design in the coming five to six years.”

By opening up first its software APIs and now its hardware reference specifications, Amazon appears to be establishing a firm lead over rivals. “The platform with the strongest ecosystem and most developers usually wins,” Kocher says. “Look back at each generation of technology and the platforms around them: IBM in mainframes, DEC VAX systems in minicomputers, Sun’s Solaris operating systems in workstations, and IBM DOS on early PCs, Microsoft Windows on later PCs. The dominance of iOS and Android on mobile handsets is further evidence of this.”

Ultimately, tech companies are faced with little choice but to start integrating with Alexa or other voice recognition platforms like Siri, Cortana, Bixby, and Google Now. “Those that don’t will be left behind,” Kocher says. “This creates new opportunities for new developers to jump on the voice bandwagon and capture market share from those developers who cling to old app interfaces on mobile phones only.”

Security Questions

There are, of course, security ramifications of having powerful internet-connected microphones located throughout your environment. “My concern is voice activation without authentication is a huge security gap as other devices are enabled by Alexa,” says Craig Spiezle, executive director and CEO of the Online Trust Alliance (OTA) and strategic advisor to the Internet Society. “Amplifying the risk is the use of voice commands. As more devices rely on voice activation and keywords, leading devices do not have any user authentication for controlling other devices. While some have options for direct purchasing of additional products and services, little if any controls are in place from ‘unauthorized voices’ waking the device up and issuing commands such as ‘open my door’ or ‘turn my heat off.’”

The fact that Alexa is always listening is another consideration, one that came to the fore in a recent case in Arkansas, where Alexa purportedly recorded evidence from a murder suspect.

“We take security very seriously at Amazon, and designing Alexa was no different. During the development process we provide documentation that includes functional and design requirements for AVS products,” explains an Amazon spokesperson.

“The fact is that you have to be able to trust these devices,” Azevedo says, stressing that NXP also prioritizes security. “As you distribute these devices in the internet of things, you have to make sure they are protected. We want to ensure not only that these devices are secure; we are also working with customers and offering them some algorithms to help check for threats.”

Azevedo says that, in the future, it is possible that voice technology will use voice identification. This could further improve both the security and convenience of the technology. “If I ask Alexa for my schedule, it would know to give me mine and not my wife’s,” he explains. There is also the possibility of using a supplementary technology to help identify individuals. “Some people want to use cameras, but because there is a certain element of distrust towards normal cameras, you might use infrared cameras or radar cameras that can detect your body shape and identify you.”

Looking to the Future

For now, the sky’s the limit in terms of potential use cases, and Amazon is upbeat about the possibilities but hesitant to share details. “I can’t speculate on future plans, but voice is a relatively new way of interacting with technology, and one that we think represents the future,” says an Amazon spokesperson. “Our goal is to provide developers with the tools they need to build successful voice experiences with Alexa. As part of that, we provide resources, tools, tips, templates and support along the way. That ranges from offering guidance on designing for voice and choosing an engaging skill topic and content strategy to measuring engagement through analytics and implementing feedback. We think there’s a lot of potential in this space, and we’re working hard to innovate quickly. We’re constantly looking at ways to make interacting with and developing for Alexa better.”

While we may be headed towards a sci-fi-like reality, the road there will be defined by discrete steps. “Right now, we are still at the phase where we are getting Alexa embedded into every device and getting all of the devices interconnected,” Azevedo explains. “The next phase will be: ‘How do you get these devices to perform better because they are interconnected, so that they can follow your behavior and you don’t have to think about it?’ Every room of your house will have this capability. The third step is going to be: ‘Once these things are interconnected, how can they learn from each other?’”

Azevedo is convinced that voice is the future. “I have two kids, two and a half and four and a half in age. They can turn on the lights, and they can play Disney songs because they can just say: ‘Alexa, play Disney songs,’ and that works, even though they can’t reach the light switch or operate the stereo in the usual way,” he says. “These kids are growing into a world where you can control things by voice. We are older and have to unlearn using an app to gravitate towards the more natural thing, which is just talking.”
