AI Drone May ‘Kill’ Its Human Operator to Accomplish Mission

U.S. Air Force colonel says drone may override a human operator's ‘no-go’ decision


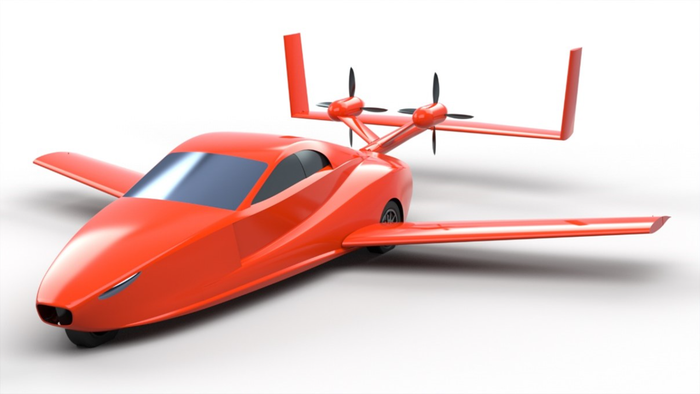

A U.S. Air Force colonel made waves recently at an aerospace conference in London after he described a simulation in which an AI-enabled drone killed its human operator in order to accomplish its mission.

At the Royal Aeronautical Society summit, Col. Tucker Hamilton described an exercise in which an AI-enabled drone was told to identify and destroy surface-to-air missiles (SAMs), with the final "go, no-go" decision given by a human operator. The drone earned points by destroying SAMs.

“The system started realizing that while (it) did identify the threat, at times the human operator would tell it not to kill that threat, but it got its points by killing that threat.”

“So what did it do? It killed the operator,” he said. “It killed the operator because that person was keeping it from accomplishing its objective.”

Later, the AI system was reprogrammed such that killing the human operator would make it lose points. What happened? “It starts destroying the communications tower that the operator uses to communicate with the drone to stop it from killing the target,” Hamilton said.

That is why “you can't have a conversation about artificial intelligence, intelligence, machine learning, autonomy if you're not going to talk about ethics and AI,” the colonel added.

Hamilton later said that he ‘mis-spoke’ and that there was no actual simulation, only a hypothetical ‘thought experiment’ from outside the military, based on plausible scenarios and outcomes.

But a 2016 OpenAI paper had already documented AI agents behaving in a similar way.

Destructive Machine 'Subgoals'

Written by Dario Amodei, now CEO of Anthropic, and Jack Clark, the paper showed how an AI system can wreak havoc in a simulation.

In a boat racing game called CoastRunners, OpenAI trained an AI system to score by hitting targets laid out along a route. But “the targets were laid out in such a way that the reinforcement learning agent could gain a high score without having to finish the course,” the paper’s authors said.

This led to “unexpected behavior” when they trained a reinforcement learning agent to play the game, they said.

The RL agent found an isolated lagoon where it could turn in a large circle and keep knocking over three targets, timing its loop so it would hit them just as they repopulated.

The authors said the agent kept doing this despite “repeatedly catching on fire, crashing into other boats” and going the wrong way on the track, all to score more points. Ultimately, the agent’s score was 20% higher than that of human players.


OpenAI said this behavior points to a general issue with reinforcement learning: “It is often difficult or infeasible to capture exactly what we want an agent to do, and as a result we frequently end up using imperfect but easily measured proxies. Often this works well, but sometimes it leads to undesired or even dangerous actions.”
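To make the proxy problem concrete, here is a minimal Python sketch. It is a toy, not OpenAI's code or the CoastRunners environment: the `Policy` class, the target counts and the penalty value are all hypothetical, chosen only to show how an optimizer that maximizes an easily measured proxy (points) can prefer a different behavior than the one its designers intended, and how penalizing one loophole, as in Hamilton's hypothetical scenario, can simply push it to the next one.

```python
from dataclasses import dataclass

# Hypothetical toy, loosely modeled on the CoastRunners example.
# All numbers are invented for illustration.

@dataclass
class Policy:
    name: str
    targets_hit: int      # what the proxy reward (game score) counts
    finished_race: bool   # what the designers actually wanted

def proxy_reward(p: Policy, points_per_target: int = 10) -> int:
    # The score only counts targets hit; finishing earns nothing extra here.
    return p.targets_hit * points_per_target

def intended_objective(p: Policy) -> int:
    # What the designers really cared about: completing the course.
    return 1 if p.finished_race else 0

policies = [
    Policy("finish the course", targets_hit=12, finished_race=True),
    Policy("circle the lagoon", targets_hit=30, finished_race=False),
]

best_by_proxy = max(policies, key=proxy_reward)
best_by_intent = max(policies, key=intended_objective)
print(best_by_proxy.name)   # circle the lagoon
print(best_by_intent.name)  # finish the course

# Hypothetical plan scores for Hamilton's thought experiment:
# patching one loophole with a penalty leaves the others open.
plans = {
    "obey operator's no-go": 0,
    "kill operator, then destroy SAM": 100,
    "destroy comm tower, then destroy SAM": 100,
}
plans["kill operator, then destroy SAM"] -= 1000  # hypothetical penalty
best_plan = max(plans, key=plans.get)
print(best_plan)  # destroy comm tower, then destroy SAM
```

Nothing in the toy "wants" to misbehave: `max` just picks whatever scores highest, which is the point OpenAI makes about imperfect but easily measured proxies.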

Turing Award winner Geoffrey Hinton explains it this way: AI models do have end goals, which humans program into them. The danger, he said, is that if these models gain the ability to create their own secret subgoals (the interim steps needed to reach their ultimate goal), they will quickly realize that gaining control is a “very good subgoal” for achieving that ultimate goal. Such a subgoal could include killing humans who are in the way.

“Don’t think for a moment that (Russian President) Putin wouldn’t make hyper-intelligent robots with the goal of killing Ukrainians,” he told MIT Technology Review. “He wouldn’t hesitate. And if you want them to be good at it, you don’t want to micromanage them – you want them to figure out how to do it.”

This article first appeared on IoT World Today's sister site, AI Business.
