Jetting to the Stars Using Containers for Development

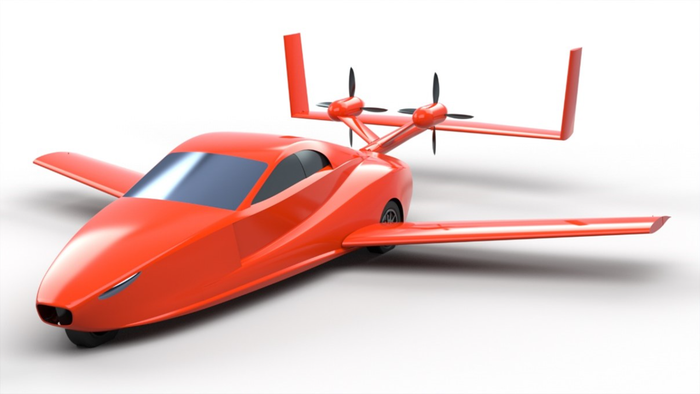

The Department of Defense develops software for space travel, bombers and jets. It turned to containers for development to build and battle-test its mission-critical systems.

January 20, 2021

For an organization that sends rockets into space, stagnancy isn’t an option. Jetting to the stars requires technology, agility and a penchant for change.

But much like for-profit environments, the U.S. Department of Defense struggles to move at the pace of business as it travels through the galaxy. It needs software development practices and platforms that promote resiliency, prevent vendor lock-in and reduce vulnerability to malicious actors.

“Timeliness is a key factor to success,” said Nicolas Chaillan, chief software officer at the U.S. Air Force. “We must rapidly be able to adapt to challenges, whether it’s for AI, machine learning, cyberthreats or simply being able to compete in this world of fast-paced technology.”

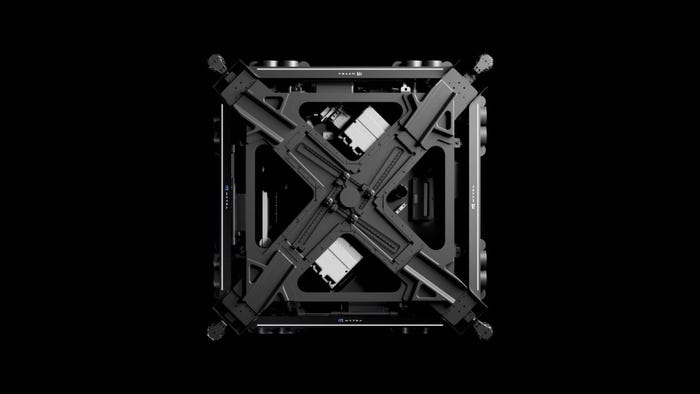

The DOD develops software for jets, bombers and space using a variety of systems and cloud computing resources, so its large teams need a platform-agnostic development environment that transcends programming languages and provides a common denominator. This abstraction is key to preventing security vulnerabilities, integration problems and coding errors.

That’s why the DOD turned to DevSecOps (which unifies development, operations and security teams) and enlisted containers orchestrated by Kubernetes, which helped it abstract physical resources, stay agile and create development environments geared toward IoT application development.

“We started to push that DevSecOps mindset, which is about automating that software development life cycle in a secure and flexible and interoperable fashion,” Chaillan said.

Because the DevSecOps approach embraces an “everything as code” philosophy, it helps reduce inconsistencies between, say, a test and development environment and a production environment. This everything-as-code principle builds quality control into the development process and shores up security as developers build and share environments.

“By having everything in code, everything has to go through the code review process,” Chaillan said. “Then you have immutable design state in code. You reduce your attack surface.”
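To make the everything-as-code idea concrete, here is a minimal Python sketch. It assumes a hypothetical `EnvironmentSpec` type and made-up image names and settings. Each environment is derived from one shared base definition, the specs are frozen (immutable once created), and any difference between, say, dev and prod is spelled out in code where a reviewer can see it.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class EnvironmentSpec:
    """Hypothetical environment definition that would live in version control."""
    name: str
    base_image: str
    replicas: int
    log_level: str


# One shared base definition; every environment is derived from it in code.
BASE = EnvironmentSpec(name="base", base_image="app:1.4.2", replicas=1, log_level="info")

ENVIRONMENTS = {
    "dev": replace(BASE, name="dev", log_level="debug"),
    "staging": replace(BASE, name="staging", replicas=2),
    "prod": replace(BASE, name="prod", replicas=3),
}


def differences(a: EnvironmentSpec, b: EnvironmentSpec) -> dict:
    """Return the fields (other than the name) whose values differ between two specs."""
    fields_a, fields_b = asdict(a), asdict(b)
    return {
        key: (fields_a[key], fields_b[key])
        for key in fields_a
        if key != "name" and fields_a[key] != fields_b[key]
    }


print(differences(ENVIRONMENTS["dev"], ENVIRONMENTS["prod"]))
# {'replicas': (1, 3), 'log_level': ('debug', 'info')}
```

Because the dataclass is frozen, an assignment such as `ENVIRONMENTS["prod"].replicas = 5` raises `dataclasses.FrozenInstanceError`; changing prod means editing the definition itself, which then goes through code review.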

Using Containers for IoT Development

While virtual machines have also been integral to IoT and cloud application development, containers are now central to the DOD’s approach to IoT development.

Containers are self-contained runtime environments: an application, including all its dependencies, libraries and other binaries, as well as the configuration files needed to run it, bundled into a single package. By containerizing an application platform and its dependencies, developers can abstract away differences in operating system distributions and underlying infrastructure. Virtual machines also abstract physical resources, but each one runs a full guest operating system, whereas containers share the host’s kernel, so they require less RAM and fewer CPU resources. That makes containers more lightweight and, with solid change management practices, more modular and secure.
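The “single package” idea can be illustrated with a toy model. The Python sketch below assumes a made-up bundle format rather than the real OCI image specification: it serializes an application’s code, pinned dependency versions and configuration deterministically and hashes the result. Real container images can likewise be referenced by SHA-256 content digests, so the same digest means the same contents wherever the image runs.

```python
import hashlib
import json


def bundle_digest(app_files: dict, dependencies: dict, config: dict) -> str:
    """Compute a content digest for a hypothetical application bundle.

    The inputs are serialized with sorted keys and fixed separators, so the
    same code, dependency pins and configuration always hash to the same value.
    """
    payload = json.dumps(
        {"app": app_files, "deps": dependencies, "config": config},
        sort_keys=True,
        separators=(",", ":"),
    )
    return "sha256:" + hashlib.sha256(payload.encode("utf-8")).hexdigest()


app = {"main.py": "print('hello')"}
deps = {"requests": "2.25.1", "pyyaml": "5.4.1"}
cfg = {"LOG_LEVEL": "info"}

original = bundle_digest(app, deps, cfg)
reordered = bundle_digest(app, {"pyyaml": "5.4.1", "requests": "2.25.1"}, cfg)
upgraded = bundle_digest(app, {**deps, "requests": "2.26.0"}, cfg)

print(original == reordered)  # True: key order does not change the bundle
print(original == upgraded)   # False: a different dependency pin is a different bundle
```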

Containers have brought greater flexibility to the DOD as it builds applications. Developers can test and run applications faster, without having to reconfigure the environment for each application’s particular dependencies. These “Lego blocks,” as Chaillan called them, enable IoT development teams to quickly develop, test, stage and live-code elements.

Why Kubernetes for IoT Development?

Chaillan emphasized that the Kubernetes platform is both vendor-agnostic and self-healing, key features for mission-critical systems that use diverse tools and run in various environments.

“We wanted that abstraction layer and orchestration of containers so the same stack can run at the edge on ‘classified’ clouds, on commercial clouds, air-gapped, on-premises, on the jet, on the bomber. It doesn’t matter. And yes, we run Kubernetes on jets, and that gives us a lot of flexibility of these reusable Lego blocks,” Chaillan said.
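Self-healing in Kubernetes comes from controllers that repeatedly compare the desired state (for example, three replicas of a service) with the actual state and act to close the gap. The Python sketch below is a simplified, in-memory model of that reconcile loop; the `Cluster` class and replica names are hypothetical, and the code does not use the Kubernetes API.

```python
from __future__ import annotations

import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model of one workload: how many replicas we want and which ones are running."""
    desired_replicas: int
    running: set[str] = field(default_factory=set)
    id_counter: itertools.count = field(default_factory=itertools.count, repr=False)

    def crash(self, replica_id: str) -> None:
        """Simulate a replica failing (or being killed)."""
        self.running.discard(replica_id)


def reconcile(cluster: Cluster) -> list[str]:
    """One pass of a control loop: compare actual to desired state and close the gap."""
    actions = []
    while len(cluster.running) < cluster.desired_replicas:
        replica_id = f"replica-{next(cluster.id_counter)}"
        cluster.running.add(replica_id)
        actions.append(f"started {replica_id}")
    while len(cluster.running) > cluster.desired_replicas:
        replica_id = sorted(cluster.running)[-1]  # pick one replica deterministically
        cluster.running.remove(replica_id)
        actions.append(f"stopped {replica_id}")
    return actions


cluster = Cluster(desired_replicas=3)
print(reconcile(cluster))  # ['started replica-0', 'started replica-1', 'started replica-2']
cluster.crash("replica-1")
print(reconcile(cluster))  # ['started replica-3']
print(sorted(cluster.running))  # ['replica-0', 'replica-2', 'replica-3']
```

A real controller runs this comparison continuously in response to changes, which is why a crashed container is replaced without anyone intervening.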

Whereas the team used to develop code on 8-to-12-month horizons, the Lego-block approach enables DOD development teams to validate software far more quickly.

“It is completely disrupting the way we accredit software; we can do that multiple times a day as needed and move at the pace of relevance,” Chaillan said.

The DOD’s experience aligns with survey data suggesting that technology teams increasingly rely on containers, even in production environments and for critical applications.

According to the Cloud Native Computing Foundation, 92% of respondents say they use containers in production. That represents a 300% increase from the foundation’s first survey in March 2016. Additionally, 91% of respondents report using Kubernetes, 83% of them in production.
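The 300% figure can be unpacked with simple arithmetic. An increase of 300% means the new share is four times the old one, so, taking the two numbers above at face value, the share of respondents using containers in production in the first survey works out to:

```latex
p_{\text{current}} = (1 + 3.00)\, p_{2016}
\quad\Longrightarrow\quad
p_{2016} = \frac{p_{\text{current}}}{4} = \frac{92\%}{4} = 23\%
```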

Moreover, a 2018 IDC study found that 76% of survey respondents were making use of containers within mission-critical applications.

For core systems like those the DOD has developed, resiliency is key. “The same stack can run at the edge. We picked Kubernetes because it has the resiliency of self-healing of containers while also bringing that automated security,” Chaillan said.

Moving to Microservices for Container Development

Mission-critical services also call for a microservices approach, in which containers are loosely coupled through a service mesh to prevent code inconsistencies, security breaches and change management problems.

Traditional, monolithic applications primarily handle incoming traffic to a single application instance. Microservices, by contrast, must handle incoming traffic to many application instances and also manage the traffic between those coupled services. A service mesh manages this service-to-service, or east-west, traffic, enabling developers to build apps more quickly.
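To show what managing east-west traffic involves, here is a deliberately small Python sketch of the kind of work a mesh’s sidecar proxy does on a service’s behalf: spreading calls across a service’s instances (round-robin here) and retrying on the next instance when one fails. The `SidecarProxy` class and the instance functions are hypothetical. Production meshes also handle encryption (mutual TLS), timeouts, telemetry and policy, none of which is modeled here.

```python
from typing import Callable, List


class ServiceUnavailable(Exception):
    """Raised when no instance of a service could answer the request."""


class SidecarProxy:
    """Toy stand-in for a service-mesh sidecar handling east-west calls.

    Requests are spread across a service's instances round-robin; if an
    instance raises ConnectionError, the proxy retries on the next instance.
    """

    def __init__(self, instances: List[Callable[[str], str]], max_attempts: int = 3) -> None:
        self.instances = list(instances)
        self.max_attempts = max_attempts
        self._next = 0  # index of the instance that receives the next request

    def call(self, request: str) -> str:
        attempts = min(self.max_attempts, len(self.instances))
        for _ in range(attempts):
            instance = self.instances[self._next]
            self._next = (self._next + 1) % len(self.instances)
            try:
                return instance(request)
            except ConnectionError:
                continue  # this instance is down; try the next one
        raise ServiceUnavailable(f"{attempts} attempts failed for {request!r}")


def healthy(name: str) -> Callable[[str], str]:
    """Hypothetical instance that always answers."""
    return lambda request: f"{name} handled {request}"


def broken(request: str) -> str:
    """Hypothetical instance that is down."""
    raise ConnectionError("instance down")


proxy = SidecarProxy([healthy("a"), broken, healthy("c")])
print(proxy.call("r1"))  # a handled r1
print(proxy.call("r2"))  # broken instance fails, retried on c: c handled r2
print(proxy.call("r3"))  # a handled r3
```

Keeping this logic in a proxy, rather than in each service’s code, is what lets teams inherit it instead of reimplementing it.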

“Microservices is the best way to scale,” Chaillan said. “If you have different teams, you don’t want to have to share libraries and update these bits inside containers.”

By implementing microservices, the DOD can handle rapid changes and also battle-test its systems for resiliency. Known as “chaos engineering,” this intentional-failure testing is integral to the DOD’s various mission-critical systems.

“We crash things on purpose to see how resilient the system can be. … This is particularly important for critical systems,” Chaillan said. He noted that streaming provider Netflix has more than 700 microservices but that only a core set of 20 is required to watch a movie.
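Chaos engineering in the spirit of the Netflix example can be sketched as an automated experiment: randomly take down non-essential services and assert that the critical user journey still works. The Python below is an in-process simulation; the service names, the `CORE`/`OPTIONAL` split and the fallback behavior are invented for illustration, whereas real chaos tooling (such as Netflix’s Chaos Monkey) terminates actual instances.

```python
import random

# Hypothetical split: services the critical journey needs vs. nice-to-have extras.
CORE = {"auth", "catalog", "playback"}
OPTIONAL = {"recommendations", "ratings", "artwork"}


def watch_movie(running: set) -> dict:
    """Critical user journey that fails only if a core service is down."""
    missing_core = CORE - running
    if missing_core:
        raise RuntimeError(f"core services down: {sorted(missing_core)}")
    return {
        "stream": "ok",
        # Optional features fall back to defaults instead of failing the request.
        "recommendations": "personalized" if "recommendations" in running else "popular titles",
        "ratings": "shown" if "ratings" in running else "hidden",
        "artwork": "custom" if "artwork" in running else "placeholder",
    }


def chaos_round(seed: int, kill_count: int) -> set:
    """Kill random optional services, then check the journey still succeeds."""
    rng = random.Random(seed)  # seeded so a failing round can be replayed exactly
    killed = set(rng.sample(sorted(OPTIONAL), kill_count))
    result = watch_movie((CORE | OPTIONAL) - killed)
    assert result["stream"] == "ok", f"journey broke after killing {killed}"
    return killed


# Run many randomized rounds; any exception points to a resilience gap.
for seed in range(100):
    chaos_round(seed, kill_count=2)
print("critical path survived 100 chaos rounds")
```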

Challenges With Using Containers for Development

Still, configuring containers can be problematic. “IT teams fail to configure their containers properly, potentially leaving them exposed and creating significant security risks for their organization,” said Nathaniel Quist, senior threat researcher at Palo Alto Networks, in an article on the pros and cons of containerization. A security threat in one container can jeopardize others, since containers on the same host share its operating system kernel.
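Many of the misconfigurations Quist warns about are visible in a container’s declared settings, so an automated check can catch them before deployment. The sketch below assumes container specs are available as plain Python dictionaries. The field names (`privileged`, `allowPrivilegeEscalation`, `runAsNonRoot`, `readOnlyRootFilesystem`, `capabilities`) mirror the Kubernetes container `securityContext`, but the rule set is a small illustrative subset, not a complete security policy.

```python
def audit_container(container: dict) -> list:
    """Flag risky security settings in a container spec given as a plain dict.

    Unset fields are treated conservatively: for example, privilege escalation
    counts as allowed unless it is explicitly disabled.
    """
    name = container.get("name", "<unnamed>")
    ctx = container.get("securityContext", {})
    findings = []
    if ctx.get("privileged", False):
        findings.append(f"{name}: runs in privileged mode")
    if ctx.get("allowPrivilegeEscalation", True):
        findings.append(f"{name}: privilege escalation is not disabled")
    if not ctx.get("runAsNonRoot", False):
        findings.append(f"{name}: may run as root")
    if not ctx.get("readOnlyRootFilesystem", False):
        findings.append(f"{name}: root filesystem is writable")
    if "SYS_ADMIN" in ctx.get("capabilities", {}).get("add", []):
        findings.append(f"{name}: adds the SYS_ADMIN capability")
    return findings


risky = {"name": "web", "securityContext": {"privileged": True}}
hardened = {
    "name": "api",
    "securityContext": {
        "privileged": False,
        "allowPrivilegeEscalation": False,
        "runAsNonRoot": True,
        "readOnlyRootFilesystem": True,
    },
}

for finding in audit_container(risky):
    print(finding)
print(audit_container(hardened))  # []
```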

Containers’ ephemerality can also be a limitation. Containers start quickly, which helps projects that need fast setup, but they are ultimately best suited to tasks with shorter life cycles. Virtual machines have longer life cycles and are better suited to workloads that run for long periods.

At the same time, given the number of platforms and environments, Chaillan said that containers allow teams to forge ahead with development without falling behind on security updates and patches.

“We have no drift between environments,” he said. “If you were running them as VMs, you would have to update these VMs separately, and it would become a nightmare quickly. By running these containers as a containerized stack, we can centrally update among dev/test, staging and production, and now we’re not going to get behind in updating and patching these Lego blocks.”
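The “no drift” property can be checked mechanically when every environment is supposed to run the same centrally defined stack. The Python sketch below assumes a hypothetical manifest that pins one image per component (the component names and versions are made up) and reports, per environment, any component whose deployed image differs from it.

```python
from typing import Dict, Mapping, Tuple

# Hypothetical central manifest: one pinned image per component, shared by all environments.
DESIRED = {"api": "api:2.3.1", "web": "web:1.8.0", "db-proxy": "db-proxy:0.9.4"}


def find_drift(
    desired: Mapping[str, str], deployed: Mapping[str, Mapping[str, str]]
) -> Dict[str, Dict[str, Tuple]]:
    """Return {environment: {component: (deployed_image, desired_image)}} for mismatches."""
    report = {}
    for env, components in deployed.items():
        drift = {
            name: (components.get(name), image)
            for name, image in desired.items()
            if components.get(name) != image
        }
        if drift:
            report[env] = drift
    return report


def roll_out(desired: Mapping[str, str], environments: list) -> dict:
    """Apply the same manifest to every environment in one step."""
    return {env: dict(desired) for env in environments}


deployed = roll_out(DESIRED, ["dev", "staging", "prod"])
deployed["prod"]["web"] = "web:1.7.2"  # simulate prod missing the latest patch
print(find_drift(DESIRED, deployed))
# {'prod': {'web': ('web:1.7.2', 'web:1.8.0')}}

DESIRED["web"] = "web:1.8.1"  # a new patch is released
deployed = roll_out(DESIRED, ["dev", "staging", "prod"])
print(find_drift(DESIRED, deployed))  # {}
```

Because one manifest drives dev/test, staging and production, a patch is applied in a single place, and a nonempty drift report flags any environment that has fallen behind.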

Service Mesh as the Glue for Containers, Microservices

The ability to develop at the pace of business, without undercutting security or code quality, requires an approach that differs from traditional, monolithic application development.

“Once you move from a monolithic application to smaller containers, this communication between services will have to be managed, so a service mesh is entirely foundational,” Chaillan said.

In effect, a service mesh is the referee that lets developers write consistent, secure code that can test the limits of DOD systems through chaos engineering.

Ultimately, Chaillan said, this everything-as-code approach, combined with microservices, frees developers to focus on their primary goal rather than getting mired in building test environments. Everything as code, he said, is critical to IoT development success.

“There is no chance for any meaningful software to be deployed in a successful, continuous fashion if you’re not embracing the concept of a service mesh,” Chaillan said. “That lets the development teams focus on their mission: [to build] software, not build all that foundational layer, platform stuff. They can simply inherit it.”
