Nvidia Upgrades its Flagship AI Chip as Rivals Circle

Nvidia unveiled the H200, which boasts more memory and faster processing than the H100 for AI workloads


At a Glance

- Nvidia unveiled an upgrade to its flagship H100 AI chip. Called H200, it boasts more memory and faster processing.

- The three largest cloud providers, AWS, Azure and Google Cloud, will deploy the H200 starting in 2024.

- AMD and Intel are getting ready to ship their own rival AI chips this year to take advantage of an Nvidia GPU shortage.

Nvidia on Monday unveiled an upgrade to its flagship AI chip, the H100, to fend off budding rivals to its dominance in the GPU market.

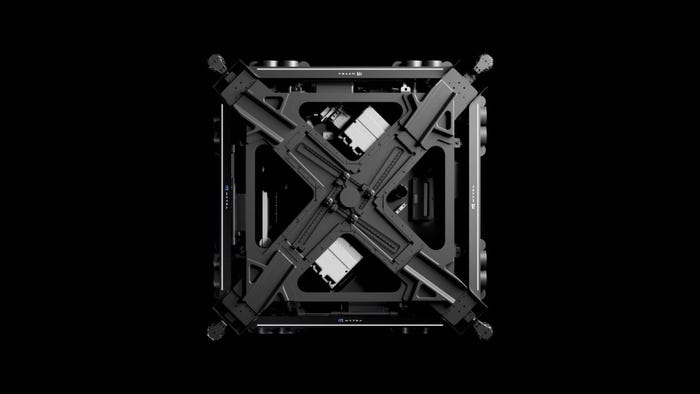

The world’s most valuable chipmaker announced the H200, which will use high-bandwidth memory (HBM3e) to handle massive datasets for generative AI and other intensive AI computing workloads.

Nvidia said the H200 is the first GPU to offer HBM3e. It has 141GB of memory delivered at 4.8 terabytes per second, which is almost double the capacity of, and 2.4 times the bandwidth of, its predecessor, the A100. The H100 supports 120GB of memory.

The chipmaker said the H200 would nearly double inference speed on the 70-billion-parameter version of Meta’s Llama 2 open-source large language model, compared to the H100. Nvidia said the H200 will see further improvements with software updates.

Nvidia said AWS, Microsoft Azure, Google Cloud, Oracle Cloud, CoreWeave, Lambda and Vultr will deploy the new chip starting in 2024.

AMD, Intel chase Nvidia

The news comes as Nvidia's rivals unveil chips that aim to challenge its GPU dominance, particularly while high demand has left its GPUs in short supply.

AMD is expected to start shipping its MI300X chip, which offers up to 192GB of memory, this year. Large AI models need a lot of memory because their parameters, along with intermediate results, must be held close to the processor while it runs calculations. AMD has demonstrated the MI300X running a Falcon model with 40 billion parameters.
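To make the memory point concrete, here is a rough back-of-the-envelope sketch in Python. It estimates only the space needed to hold a model's weights and compares it with the chip capacities quoted in this article. The 2 bytes per parameter (16-bit weights) is an assumption and does not come from Nvidia or AMD, and the function names are illustrative only. Real deployments also need room for activations and caches, so actual requirements are higher.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption (not from the article): 2 bytes per parameter, i.e. 16-bit weights.
# Activations, caches and framework overhead are ignored here.

def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits(num_params: float, capacity_gb: float, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit within the given memory capacity."""
    return weight_memory_gb(num_params, bytes_per_param) <= capacity_gb


# Parameter counts and chip capacities as quoted in the article.
models = {"Falcon 40B": 40e9, "Llama 2 70B": 70e9}
chips_gb = {"H200": 141, "MI300X": 192}

if __name__ == "__main__":
    for model, params in models.items():
        need = weight_memory_gb(params)
        for chip, capacity in chips_gb.items():
            verdict = "fits" if fits(params, capacity) else "does not fit"
            print(f"{model}: ~{need:.0f} GB of weights {verdict} in {chip} ({capacity} GB)")
```

Under these assumptions, a 70-billion-parameter model needs about 140GB for weights alone, which is why per-chip memory capacity has become a headline specification.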

Intel is releasing AI-powered PC chips called Meteor Lake in December. It is a chiplet SoC design, small and meant to work with other chiplets. Meteor Lake includes Intel’s first dedicated AI engine integrated directly into an SoC to “bring AI to the PC at scale,” the company has said.

SambaNova Systems has its SN40L chip, which it said can handle a 5-trillion-parameter model and support sequence lengths of more than 256k on a single system, for better quality and faster outcomes at a lower price.

Nvidia's H100 is expensive, often costing more than $30,000 per chip.

This article first appeared in IoT World Today's sister publication AI Business.
