Language Model Enhances Road Navigation, Provides Commentary

Wayve’s AI system is designed to improve driving behavior of autonomous vehicles


British autonomous vehicle startup Wayve has developed an AI system that combines a language model with visual data to better navigate roads while explaining its decisions in real time.

LINGO-2 is a vision-language-action model (VLAM) that combines language with vision capabilities. It can perceive the world around it while controlling the vehicle.

LINGO-2, the successor to LINGO-1, which was released in November 2023, was designed to improve the driving behavior of an autonomous vehicle.

The model processes images from a vehicle’s cameras, feeding that data along with information about a road’s speed rules into a language model to provide a continuous commentary of its driving decisions.

For example, it can explain that it is slowing down for pedestrians on the road or executing an overtaking maneuver.

The model can also be prompted to perform commands like “pull over” or “turn right.” LINGO-2 can also predict and respond to questions about the road ahead and the decisions it is making while driving.

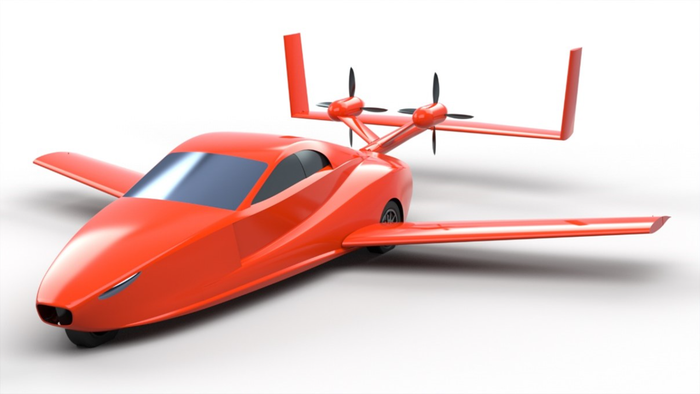

Wayve conducted the first VLAM test on a public road, deploying LINGO-2 on a drive through central London.

LINGO-2 followed a route and was able to change lanes, reduce speed to adjust to traffic conditions, give instructions on how to safely pass a bus, and stop at red lights.

LINGO-2’s underlying mechanics combine Wayve’s vision model with an auto-regressive language model.

The vision model processes camera images into a sequence of tokens. Those tokens, together with variables such as current speed and the active route, are fed into the language model.

The language model is trained to predict a driving trajectory and produce commentary text. The car’s controller then performs the next driving action.
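The data flow described above can be illustrated with a minimal Python sketch. It is not Wayve's implementation: every name here (`encode_images`, `language_model`, `controller`, `drive_step`) is hypothetical, and simple rules stand in for the trained vision and language models. It only shows the order in which the pieces hand data to one another.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ModelOutput:
    trajectory: List[Tuple[float, float]]  # hypothetical (x, y) waypoints in metres
    commentary: str


def encode_images(frames: List[List[int]]) -> List[int]:
    """Toy stand-in for the vision model: turn camera frames into tokens."""
    return [pixel % 256 for frame in frames for pixel in frame]


def language_model(inputs: Dict) -> ModelOutput:
    """Toy stand-in for the language model.

    A real model would predict the trajectory and commentary
    auto-regressively; a fixed rule is used here instead (assumption).
    """
    step = inputs["speed_mps"] * 0.5  # distance covered in half a second
    hazard = max(inputs["image_tokens"], default=0) > 200  # made-up threshold
    if hazard:
        step *= 0.5
        commentary = "Slowing down for a hazard ahead."
    else:
        commentary = f"Continuing along the route: {inputs['route']}."
    trajectory = [(0.0, step * i) for i in range(1, 4)]
    return ModelOutput(trajectory, commentary)


def controller(trajectory: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Toy controller: take the first waypoint as the next driving action."""
    return trajectory[0]


def drive_step(frames: List[List[int]], speed_mps: float, route: str):
    """One pass: images -> tokens -> language model -> controller."""
    tokens = encode_images(frames)
    inputs = {"image_tokens": tokens, "speed_mps": speed_mps, "route": route}
    output = language_model(inputs)
    return controller(output.trajectory), output.commentary


if __name__ == "__main__":
    action, commentary = drive_step([[10, 20], [30, 250]], 10.0, "turn right")
    print(action, commentary)
```

The point of the sketch is that a single model output carries both the trajectory and the commentary, so the explanation a passenger reads comes from the same prediction the controller acts on.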


Credit: Wayve

The startup said that combining visual capabilities with language in models like LINGO-2 “opens up new possibilities for accelerating learning with natural language.”

“Natural language interfaces could, even in the future, allow users to engage in conversations with the driving model, making it easier for people to understand these systems and build trust,” according to a Wayve blog post.

Wayve plans to conduct further research on the safety of controlling the car’s behavior with language.

Having conducted off-road tests in its virtual simulator platform, Ghost Gym, Wayve wants to conduct more real-world experiments to ensure the system is safe to use on the roads.

Wayve was founded in 2017. Its backers include Microsoft, online supermarket Ocado, Virgin Group founder Sir Richard Branson and the inventor of the World Wide Web, Sir Tim Berners-Lee.

This article first appeared in IoT World Today's sister site, AI Business.
