Google Releases AI-Learning System to Make Robots Smarter

The novel system helps robots make decisions based on their own experience, as well as data from the web

Google DeepMind, Google's artificial intelligence research lab, has released a new AI-learning system that can make robots smarter.

The new AI model, Robotic Transformer 2 (RT-2), is the latest version of what Google calls its vision-language-action (VLA) model.

These VLA models, in essence, give robots better reasoning and common-sense capabilities. Because the robots can draw on a broader range of both web- and experience-based information, they are better able to understand instructions and select the best objects for a requested action.

Vincent Vanhoucke, head of robotics at Google DeepMind, cited the example of a robot being asked to throw out trash. With existing models, a user would first have to train the robot to understand what trash is before it could perform the task. With RT-2, the robot can draw on web and experience data to work out what trash is on its own and perform the task with a greater level of autonomy than before.

DeepMind unveiled the first iteration of the system, RT-1, last December. Using this first model, the company trained its Everyday Robot systems to perform tasks such as picking and placing objects and opening drawers.

In a blog post, the team said RT-2 has "improved generalization capabilities" compared with RT-1, along with a wider understanding of verbal and visual cues. According to DeepMind, RT-2 learns from web and robotics data and "translates this knowledge into generalized instructions for robotic control."

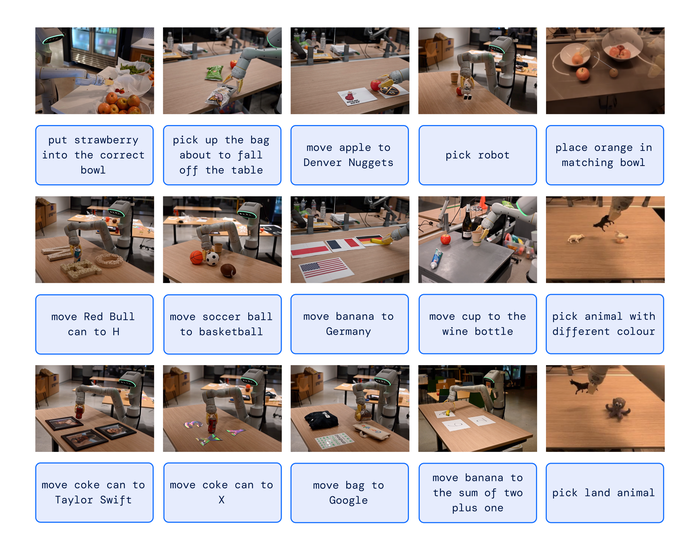

The team conducted more than 6,000 robotic trials, testing RT-2 on a robotic arm in an office kitchen setting. In each trial, the robot had to decide how best to perform a task, combining knowledge from its own experience with data from the web.

Credit: Google DeepMind

“We show[ed] that incorporating chain-of-thought reasoning allows RT-2 to perform multi-stage semantic reasoning, like deciding which object could be used as an improvised hammer (a rock), or which type of drink is best for a tired person (an energy drink),” the team wrote.

The team said the robots' success rate on new, previously unseen tasks improved from 32% with RT-1 to 62% with RT-2.

"We…defined three categories of skills: symbol understanding, reasoning, and human recognition," the team said. "Each task required understanding visual-semantic concepts and the ability to perform robotic control to operate on these concepts…Across all categories, we observed increased generalization performance (more than 3x improvement) compared to previous baselines."

According to Vanhoucke, the novel system has potential applications in general-purpose robotics.

“Not only does RT-2 show how advances in AI are cascading rapidly into robotics, it shows enormous promise for more general-purpose robots,” he said. “While there is still a tremendous amount of work to be done to enable helpful robots in human-centered environments, RT-2 shows us an exciting future for robotics just within grasp.”
