New Chip Designs to Boost AI Workload Processing

New chip designs are changing the way AI workloads are processed, including an analog chip that improves computer vision

November 14, 2023


At a Glance

- New chips are being designed to process AI workloads more efficiently, according to industry and academic experts.

- One example is an analog chip that speeds up and improves computer vision, from researchers at Tsinghua University.

New chip designs could soon change how AI runs by handling generative workloads more efficiently.

“When it comes to machine learning, keeping up with the requirements of AI/ML workloads, both in terms of hardware and software, is paramount,” Siddharth Kotwal, Quantiphi's global head of Nvidia practice, said in an interview. “The potential hardware opportunities revolve around developing workload-specific AI accelerators/GPUs to cater to the specialized needs of enterprises.”

General-purpose microprocessors like those from Intel and AMD offer high performance for a broad spectrum of applications, Ben Lee, professor at the University of Pennsylvania’s Penn Engineering, noted in an interview. However, he said that chips customized for specific application domains, such as AI, can offer much greater performance and energy efficiency.

“First, they optimize the movement of data into and within the processor, reducing the number of energy-intensive data transfers,” he added. “Second, they create large custom instructions that perform much more work per invocation, which allows the chip to amortize the energy costs of supplying data for those instructions. Computer engineers often use a rule of thumb: custom chips tailored for an application domain can improve performance and energy efficiency by two orders of magnitude, i.e., 100x.”

One promising area of research is processing-in-memory (PIM), which couples emerging memory technologies with analog computation, Lee said. The memory technology includes a programmable resistor that can be used to represent a machine-learning model’s parameters or weights.

“As current flows through these programmed resistors, the memory can implement multiplications and additions that form the basis for many machine learning computations,” he added. “PIM offers much greater efficiency because computation is embedded within the data, eliminating the need to move large volumes of data across long distances to the processor.”
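The multiply-and-add behavior Lee describes can be illustrated with a small simulation. This is a minimal sketch of an idealized resistive crossbar, not a model of any specific chip: it assumes each programmed resistor is stored as a conductance, applies Ohm's law (current = conductance × voltage) at each resistor, and sums the currents on each output column (Kirchhoff's current law). The function name `crossbar_mac` and the example values are hypothetical.

```python
def crossbar_mac(conductances, voltages):
    """Return the summed current on each output column of an idealized crossbar.

    conductances[i][j] is the programmed conductance (a model weight) joining
    input row i to output column j; voltages[i] is the input applied to row i.
    Real devices add noise, wire resistance, and nonlinearity, all ignored here.
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for row, voltage in zip(conductances, voltages):
        for j, conductance in enumerate(row):
            # Ohm's law gives the per-resistor current; currents sharing a
            # column wire simply add, which performs the accumulation step.
            currents[j] += conductance * voltage
    return currents


# Hypothetical weights (3 inputs, 2 outputs) and input voltages.
weights = [
    [1.0, 0.5],
    [2.0, 0.0],
    [0.5, 1.5],
]
inputs = [1.0, 2.0, 4.0]

column_currents = crossbar_mac(weights, inputs)
print(column_currents)
```

Each column current equals one dot product of the inputs with a column of weights, so the whole matrix-vector product is produced where the weights are stored rather than after moving them to a processor.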

Kotwal said demand for edge GPUs is likely to grow, especially for edge inference, which will call for GPUs from Nvidia, Arm, Qualcomm and other companies in the system-on-chip (SoC) and mobile domains.

Minimizing Interference

University of Southern California researchers recently developed a method that lets devices minimize interference during AI tasks. The technology reportedly reaches an information density of 11 bits per component, which would make it the most compact memory technology to date. Chips this small could fit into mobile devices and significantly boost their capabilities.
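To put the 11-bit figure in perspective, the arithmetic below is a rough sketch rather than anything taken from the USC work. It assumes that "11 bits per component" means each component can be set to one of 2^11 distinguishable states, and it compares how many components a given amount of data would need against a one-bit-per-cell memory. The helper `cells_needed` and the 1 KiB example are hypothetical.

```python
BITS_PER_COMPONENT = 11  # figure reported in the article

# Number of distinguishable states one component must hold to store 11 bits.
levels_per_component = 2 ** BITS_PER_COMPONENT


def cells_needed(total_bits, bits_per_cell):
    """Smallest number of cells that can hold total_bits (ceiling division)."""
    return -(-total_bits // bits_per_cell)


one_kib_in_bits = 8 * 1024
multi_level_cells = cells_needed(one_kib_in_bits, BITS_PER_COMPONENT)
single_bit_cells = cells_needed(one_kib_in_bits, 1)

print(levels_per_component, multi_level_cells, single_bit_cells)
```

Under that assumption, storing the same data takes roughly one eleventh as many components as a one-bit-per-cell design, which is where the density advantage comes from.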

New NPUs, ASICs and FPGAs designed for AI workloads can be much more efficient and cost-effective, Robert Daigle, Lenovo’s director of Global AI, said in an interview. He predicted that AI accelerators will become more specialized for specific use cases. For example, new accelerators are being designed specifically for computer vision inference, generative AI inference and training.

The latest chip designs are integrating capabilities to operate in liquid-cooled environments, marking a shift towards more sustainable energy practices, Daigle said. A key design priority is minimizing energy consumption and enhancing heat dispersion.

The evolution of AI accelerators is branching into two distinct trajectories: discrete, purpose-built accelerators and AI cores integrated into multipurpose silicon, like CPUs, Daigle noted. The combination of advanced, efficient silicon, innovative liquid cooling techniques, and streamlined AI code within robust frameworks is poised to amplify the potential of new AI models and solutions.

“Soon, chips will help lead the way in sustainability efforts to drive peak AI performance results while reducing and reutilizing energy consumption,” he added. “AI will continue to advance and become more complex; advancing chip design will help lead the way in that evolutionary process. We can expect to see significant power consumption reduction, acoustic improvements, and cost savings.”

AI in Computer Vision

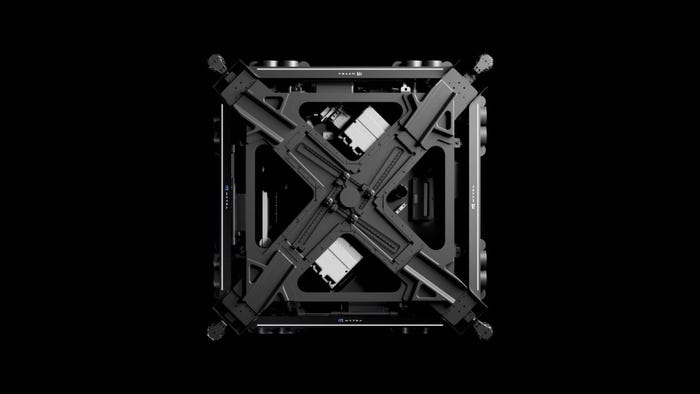

One recent innovation comes from researchers at Tsinghua University in China. They said they have created an entirely analog photoelectric chip that integrates optical and electronic computing for rapid and energy-efficient computer vision processing.

Analog and digital signals are two ways of transmitting information. Analog signals, like light forming an image, vary continuously, while digital signals, like binary numbers, take only discrete values.

Computer vision tasks like image recognition and object detection often start with analog signals from the environment. To process them with AI neural networks, which are trained to find patterns in data, the analog signals must first be converted into digital ones. The conversion takes time and energy, which can slow down the neural network. Photonic computing, which uses light signals, is a promising solution.
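The conversion step described above can be sketched as a uniform quantizer, the basic operation an analog-to-digital converter performs on every sample. This is an illustrative model only, not the converter used in any particular camera or chip; the function name `quantize`, the 0-to-1 input range, and the sample values are assumptions.

```python
def quantize(sample, bits, v_min=0.0, v_max=1.0):
    """Map a continuous sample to the nearest of 2**bits integer codes.

    Values outside [v_min, v_max] are clamped to the range, as a real
    converter would saturate.
    """
    max_code = 2 ** bits - 1
    clamped = min(max(sample, v_min), v_max)
    fraction = (clamped - v_min) / (v_max - v_min)
    return round(fraction * max_code)


# Hypothetical light intensities from four pixels, on a 0-to-1 scale.
analog_pixels = [0.0, 0.4, 0.9, 1.0]

# A 2-bit converter has only four codes (0 to 3), so nearby intensities
# can collapse onto the same digital value.
digital_pixels = [quantize(p, bits=2) for p in analog_pixels]
print(digital_pixels)
```

Every pixel of every frame has to pass through a step like this before a digital neural network can use it, which is the time and energy cost an all-analog design tries to avoid.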

In their paper published in Nature, the researchers created an integrated processor combining the benefits of light and electricity in an all-analog way. They call it ACCEL, short for "all-analog chip combining electronic and light computing."

"We maximized the advantages of light and electricity under all-analog signals, avoiding the drawbacks of analog-to-digital conversion and breaking the bottleneck of power consumption and speed," said Fang Lu, a researcher from the Tsinghua team, in a news release.

This article first appeared in IoT World Today's sister publication AI Business.
