How to Bring Intelligence to the Edge as Simply as Possible

At Embedded World 2024 and Hannover Messe, companies in embedded applications and industrial sectors showcased how their products are advancing with AI


April saw two events bring attention to artificial intelligence (AI) in embedded applications: Embedded World 2024, held April 9-11 in Nuremberg, Germany, and Hannover Messe, held April 22-26 outside Hanover, Germany. Both gave companies active in embedded applications and the industrial space a chance to demonstrate how their products and services are keeping up with the rapid growth of AI.

AI Is Becoming a Feature of Products at the Edge

Other recent shows, such as Mobile World Congress 2024 and CES 2024, placed significant emphasis on deploying and integrating cloud-based AI, including Large Language Models (LLMs) and other generative AI (GenAI) models. Embedded World and Hannover Messe were notable instead for their focus on AI practiced at the edge: embedded in the device and not reliant on a cloud connection for access to the model. This matters because it represents a transition toward inference rather than just training, an important step in the deployment of active AI models. At the edge, AI is typically one component of a chip designed primarily for other purposes, with the AI assisting that primary function. This is a different design and sales proposition from that of the large AI chips designed specifically to run LLMs.

Edge AI is important because it represents AI performing a comparatively narrow, focused, and measurable task, rather than more open-ended output from LLMs, for example. However, just as LLMs and new generations of GenAI require dramatically more advanced processors to run massively complex models, so embedded AI models require more advanced processors, be it AI on an applications processor, a co-processor, or on a graphics processing unit (GPU) used as an accelerator.

The crucial difference is that many, likely most, edge devices are by their nature limited by power and size requirements. As a consequence, the tasks AI is asked to perform in embedded applications must be limited and focused. This requires models that are equally focused, rather than large, open-ended models with billions or trillions of parameters.
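To make the size constraint concrete, the sketch below estimates how much memory a model's weights alone would occupy. The parameter counts, bit widths, and the 2 MiB memory budget are illustrative assumptions, not figures from Omdia or any vendor, and the function names are hypothetical.

```python
def weight_footprint_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed to store the weights alone.

    Ignores activations, buffers, and runtime overhead, so the real
    requirement is higher than this estimate.
    """
    return (num_params * bits_per_weight + 7) // 8  # round up to whole bytes


def fits(num_params: int, bits_per_weight: int, memory_budget_bytes: int) -> bool:
    """True if the weights fit inside the given memory budget."""
    return weight_footprint_bytes(num_params, bits_per_weight) <= memory_budget_bytes


# Assumed, illustrative figures only.
EDGE_BUDGET_BYTES = 2 * 1024**2        # hypothetical 2 MiB on an embedded device
FOCUSED_MODEL = (250_000, 8)           # 250k parameters, 8-bit weights
OPEN_ENDED_MODEL = (7_000_000_000, 16)  # 7 billion parameters, 16-bit weights

if __name__ == "__main__":
    for name, (params, bits) in [("focused", FOCUSED_MODEL),
                                 ("open-ended", OPEN_ENDED_MODEL)]:
        size = weight_footprint_bytes(params, bits)
        print(f"{name}: {size:,} bytes, fits: {fits(params, bits, EDGE_BUDGET_BYTES)}")
```

Under these assumptions, the focused model needs about a quarter of a megabyte, while the multi-billion-parameter model needs several gigabytes for its weights alone. That gap is why a scaled-down copy of an enterprise model is not a practical starting point for a power- and size-limited device.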

This means that although enterprise AI and edge AI are often grouped together, they are in many ways different propositions, with different needs that require different approaches. Omdia believes there has been a tendency in the industry to look at edge AI as just a smaller version of enterprise AI. The differences in requirements and resources, however, mean that AI in embedded applications is not, and cannot be, just a scaled-down enterprise AI. It will be easier to develop and integrate if its development is rooted in vendors already familiar with the needs of embedded applications; that is, embedded companies need to design embedded solutions.

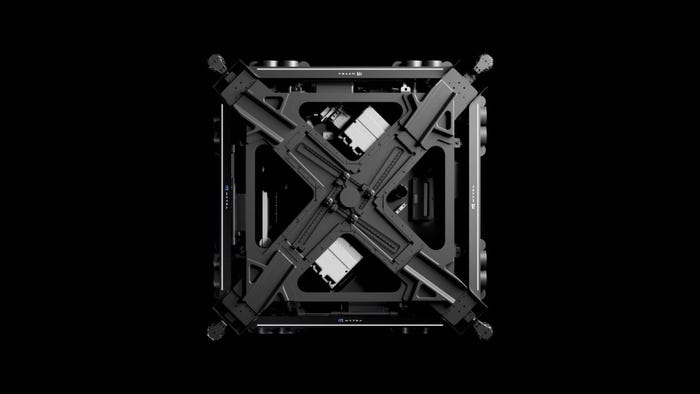

These embedded AI products include the impressive new Astra platform from Synaptics, which lets clients select from an array of processors, AI tools, connectivity options, and partner solutions. This gives partners a manageable list of options for customizing the System-on-Chip (SoC) for their needs, and it simplifies upgrading a design if, for example, a different processor or connectivity option is needed in the future. Synaptics’ Astra platform demonstrates the value of an IoT company designing IoT solutions at the intelligent edge. Similarly, AMD is well-positioned to bridge data center and edge AI: its sizable adaptive compute line (driven in no small part by its acquisition of Xilinx) gives it strength in the embedded space, alongside its large x86-based presence running LLMs and other cloud-based AI.

Advanced Processing Is Also Pushing the Growth of CPUs

Omdia’s Applications Processors in the IoT – 2024 Analysis, released in February, gives a thorough overview of the growing market for added compute in embedded applications. The growth in AI corresponds to a general increase in processing capacity in the IoT, with some vendors even choosing to perform edge AI inference on the central processing unit (CPU) rather than on a co-processor or accelerator. Omdia has also found that, for those using a co-processor or accelerator, an applications processor is increasingly required to perform the many administrative tasks that an AI-enabled embedded chip requires, including those relating to security and connectivity. A number of advanced-performance, power-sensitive chips were on display at Embedded World, including NXP’s growing line of MCX crossover MPUs and GPU firm Imagination’s RISC-V based Catapult MPU, which offers developers the option of boosting performance or efficiency. As seen in Figure 1 below, Omdia forecasts revenue from applications processors in the IoT to grow by 11.5% a year from 2023 to 2030. Over this period, Omdia predicts applications processors will grow from 25% of processor revenue in the IoT to over 35%.

[Figure 1: Omdia forecast of applications processor revenue in the IoT, 2023 to 2030]
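As a rough check on what the forecast implies, the sketch below compounds the 11.5% annual rate over the seven years from 2023 to 2030. It assumes the figure is a compound annual growth rate applied evenly each year, which the article does not state explicitly. The function name is illustrative.

```python
def compound_growth_multiple(annual_rate: float, years: int) -> float:
    """Total growth multiple after compounding annual_rate for the given years."""
    return (1.0 + annual_rate) ** years


if __name__ == "__main__":
    years = 2030 - 2023  # seven annual steps
    multiple = compound_growth_multiple(0.115, years)
    print(f"Revenue multiple over {years} years: {multiple:.2f}x")  # about 2.14x
```

Under that assumption, applications processor revenue in the IoT would slightly more than double over the forecast period.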

Embedded AI and ML Are Continuing to Grow in Capability, Not Just Volume

STMicroelectronics also demonstrated several Edge AI devices, which the company has begun to describe as employing “Embedded AI” rather than Edge AI. STMicroelectronics’ Embedded AI devices offer a level of performance above what has traditionally been offered at the data edge, where machine learning (ML) has normally been used as a shorthand for narrowly focused and very low power solutions. While these ML products usually make for excellent demonstrations, they have often struggled to produce usable results in real-world deployments, as they are less confident with messy or confounding data. Embedded AI that can solve problems in the embedded space requires a more robust and powerful AI processor, though one that remains sensitive to the power limitations of an edge device.

Arm also demonstrated its commitment to a capable embedded AI platform with updates to the Helium vector extensions for its leading-edge v8 embedded architecture. Several Arm partners demonstrated how Helium can enable more efficient advanced processing, including Infineon’s PSOC Edge E8x MCUs and Alif Semiconductor’s Balletto MCU, which also features support for the Matter smart home protocol. Like those two products, Ambiq's Apollo510 is based on Arm’s 32-bit Cortex-M55, and it was awarded an Embedded World “Embedded Award” for outstanding innovation in hardware. With the Helium extension maximizing the processing power of the chip, the Apollo510 has improved AI and ML capabilities compared to its predecessors. The popularity of the Cortex-M55 with Helium for ML and Edge AI in a variety of applications is another strong indication of Arm’s continued strength at the intelligent edge. Omdia’s AI Processors at the Edge, updated for 2024, has additional details on the market for embedded AI processing.

Edge Impulse followed its recent appearance at the Nvidia GPU Technology Conference (GTC) in March with a sizable presence at both Embedded World and Hannover Messe. Edge Impulse’s low-code, device-neutral tools enable end-to-end development and support for AI and ML in embedded applications. In conversation with Omdia, Edge Impulse CEO Zach Shelby confirmed that the definition of TinyML had changed, with the TinyML Foundation now encompassing all of edge AI. This essentially brings together everything outside of the cloud and data center, making the distinction between enterprise AI and embedded AI a matter of location rather than size. The change eliminates the confusing distinction between ML and edge AI, but it potentially adds confusion by conflating everything from data-edge ML done on an ultra-low-power MCU, such as that demonstrated by TDK’s Qeexo at Embedded World, to GPU-driven edge appliances running LLMs. Omdia’s report TinyML: Technology, Players, Strategies will be published in June and will offer more in-depth coverage of these applications, including their definitions and scope.

The Future of Embedded AI Lies in a Finely Tuned Balance Between Device and Data Center

The Embedded AI demonstrated at both Embedded World and Hannover Messe represents a single perspective; while the offloading of compute and intelligence to the edge is frequently discussed in binary terms (either the processing is in the cloud or it is at the edge), in reality, virtually all intelligent devices and networks will be hybrid. Edge processing is fast and secure, but models need to be evaluated and refreshed, and deployments will need to learn from each other, all of which require access to the cloud.

As a consequence, the future of Embedded AI is balanced between edge and cloud: between real-time, application-focused acceleration to manage specific and defined operations, and cloud-based model development, management, and maintenance. The macro and the micro will find a balance through iteration, with trade-offs made between power and performance, speed and connectivity, and server space and local processing.
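The hybrid pattern described here can be sketched in a few lines: inference runs locally on the device, while a cloud-side store supplies refreshed models when a newer version exists. Everything in this sketch is a hypothetical stand-in. The `Model`, `CloudRegistry`, and `EdgeDevice` classes do not correspond to any vendor's product or API, and a single threshold stands in for real model weights.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    """Hypothetical model: a version number plus a threshold standing in for weights."""
    version: int
    threshold: float

    def infer(self, reading: float) -> bool:
        # Local, real-time decision that needs no network access.
        return reading > self.threshold


class CloudRegistry:
    """Hypothetical cloud-side store holding the latest evaluated model."""

    def __init__(self, latest: Model) -> None:
        self.latest = latest


class EdgeDevice:
    """Runs inference locally and refreshes its model only when connected."""

    def __init__(self, model: Model) -> None:
        self.model = model

    def infer(self, reading: float) -> bool:
        return self.model.infer(reading)

    def refresh(self, registry: CloudRegistry) -> bool:
        """Adopt the registry's model if it is newer; return True if updated."""
        if registry.latest.version > self.model.version:
            self.model = registry.latest
            return True
        return False


if __name__ == "__main__":
    device = EdgeDevice(Model(version=1, threshold=0.5))
    print(device.infer(0.7))  # decided at the edge with model version 1

    registry = CloudRegistry(Model(version=2, threshold=0.8))
    print(device.refresh(registry))  # model refreshed from the cloud
    print(device.infer(0.7))  # same reading, decided with model version 2
```

The design choice to note is the separation of concerns: the time-critical path (`infer`) never touches the network, while the slower path (`refresh`) is the only point that depends on cloud access.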
